Auto-Updating Copy | Automated Texts + Product Descriptions

Google launches generative AI in several countries. 5 Theses on LLM Search

Reading Time 18 mins | April 24, 2025 | Written by: Robert Weißgraeber

Last updated: April 2025

As the largest search engine and most important source of traffic, Google's policies are a very relevant part of the daily discussion for marketers and SEOs. With Google manager John Mueller's statement on "AI-generated content" and its impact on websites, the question of whether automatically generated content will be penalized by Google's webspam team comes up again and again.The short version in advance: The added value for the user determines whether or not Google penalizes websites with auto-generated text. Even the current wave of AI generation tools has no influence on this principle. Therefore, from an SEO point of view, it is no problem to publish texts generated with AX Semantics. Even in very large quantities, as is the case with automated product descriptions, as long as they meet the known quality standards.

Check out our ticker for the latest on automated content, Google, and what's new:

2024/2025: Google launches generative AI in several countries.

5 Theses on LLM Search, and why you already should be having a VP of GAIO/ LLMO / GEO

When we talk about LLM search, we are talking about the search intent that is queried against LLM-based products such as ChatGPT Search, Google SGE (aka AI Overviews), or Perplexity. (notable for being the first with a dedicated product search https://www.theverge.com/2024/11/18/24299574/perplexity-ai-search-engine-buy-products)

Typically, these search engines return a written summary of the topic, containing the facts and arguments relevant to the search intent, while citing sources and/or providing links for further reading. In addition to the content 'stored' in the LLM (in the text corpora on which they are trained), these products now also (live) crawl the web.

Recently, companies have noticed that the incoming web traffic for some of these tools has surpassed the traffic they get from some of the smaller established search engines, e.g. one company now gets more traffic from ChatGPT than from Bing.

So what's the takeaway here? 5 arguments I would like to make.

1. LLMs are perceived as helpful

Users perceive these tools as helpful. Otherwise they wouldn't use them. Of course, hallucinations are a problem, and some users may not be aware of this, but the overall experience is rated as net positive, otherwise use would not continue and overall adoption would drop. And while this is true, users still visit the citations for further study and details (or to check the LLM answer), otherwise we wouldn't see traffic sent to the sources.

2. Search results work in a different way, but the way of using it is similar.

The search engine result page (SERP) of established search engines such as Google, which we are used to seeing built from a list of links, is gone. (Actually, with many changes of the SERP in the last years, adding "feature snippets", various integrated other types of results (products, images, news...), it is long gone, since 2018, (The era of 10 blue links: https://zissou.pacific.co/blog/google-serp-history-upcoming-changes-and-their-impact-in-2024) But the way of using it is the same: the reader reads the result (studies the links and descriptions), clicks on the citations (formerly links), makes a follow-up question (formerly: adjusting the search query), and if they think they have their answer, they move on.

3. Adoption is exploding

If you're still using Google or Bing, don't take your own behaviour as an objective measure of LLM-based search usage. Taking over 16-year-old search engines like Bing is no small feat. Yes, Google has 20x more users than ChatGPT in EU searches (https://searchengineland.com/chatgpt-search-41-million-average-monthly-users-eu-454478), but with the advent of Google's AI overviews, the proportion of searches answered by an LLM will change enormously.

Listen to people around you, how many are using ChatGPT & Co as the first choice to look at for any question they have.

Even sceptical users are now admitting that they're starting to use LLM-based search more and more for certain usage scenarios, and not just for leisure, low-impact tasks, but also for day-to-day professional work. And as usual, younger cohorts are quicker to adopt new technologies…

4. Most searches are not in search engines, but will be in-context, in-app.

LMS-based response systems are being built right and left into every tool we use. This is changing the fundamentals of how we use the web. Instead of a journey starting in a "browser window home page" with the familiar simple Google box or Safari' search bar, users don't actively choose to search. They get their question answered "in context", in the application they are using (e.g. a developer sees answers based on the infamous stack overflow content while using his editor of choice, be it VS Code, emacs,..., - not by "googling"). (This will also change the way browsers are used: maybe we won't even consciously open browser windows anymore, but rather work (or play) in our everyday applications, and only get web content in modals or pop-ins attached to LLM answers?)

It's not that big a leap, other platforms like TikTok or Insta have already seen massive search volumes, now they can just actually return answers and not just ads and reels... What this means for paid links (e.g. Adwords, SEA in general), which have made Google the #1 global advertising company, remains to be seen.

5. The business model for search engines is being fundamentally challenged.

At first glance it is easy to see: where are the paid links? While in the era of the 10 blue links we had the top ones sold to the highest bidder, that's not so easy to replicate here, as LLMs need to retain their content-oriented viewpoint by highlighting the sources with the most differentiating information. and you don't get traffic for just inserting your valuable content, but only for the citations that are clicked, so why pay

We're already seeing other business models, such as paid subscriptions, that don't rely on the hidden sale of personal data, but can these incubating search engines replicate their success? - And that includes the whole martech space as a whole, where a significant ecosystem of vendors has been created over the last few decades, ranging from software vendors to service companies to whole departments in corporations.

On the other hand, the cost of an LLM search is much higher than a traditional search, which changes the underlying unit economics. What will survive after the VC and market share money has been spent is unknown.

The change is here. And it is fundamental.

Even if we don't see it in the "stock market data" yet, it has changed the internet forever: It has changed the inner workings of how different parts of the industry work together, from social media to browsers to advertising to business apps.

And it will change the traffic you get to your website.

The internet has changed.

Some things don't change.

And those things are fundamental. Even if the technology and the way you access it is completely different, it still has to serve the purpose that users want it to serve: Answer their questions, give them advice, send them somewhere... . So LLM-based search engines will have to focus on very similar things to the classic ones: determining "correct sources", weeding out spam, staying up to date. So the mechanics of page ranking, authority, penalties, crawling/indexing may be different now, but their original goal still applies. (For site owners, the same applies: to get indexed, they need to make their content accessible and crawlable (as with technical SEO), they need to create content that is relevant to the reader (not themselves) with valuable, unique and authoritative content.

Then they will get the traffic they want, if not they will have to pay for it. The only difference is that the links in the search results are now called citations, not links. And SEO must now be called GAIO, GEO or LLMO - the die has not yet been cast on what it will be called.

March 2024: Google Spam Update: "Scaled content abuse"

Is Google fighting against AI texts NOW? The answer is clear: No! Google is merely reinforcing its already well-known plan to promote high-quality, helpful content. To this end, Google is trying to prevent masses of spammy content that is merely aimed at influencing rankings. Explicitly described are measures against: "generate low-quality or unoriginal content at scale with the goal of manipulating search rankings".

Google therefore does not penalize AI texts per se, but texts that do not contain any added value for users. Content managers have the task of utilizing advanced AI technologies not only for the sake of efficiency, but also to exploit their potential for high-quality content.

The development in text creation increasingly resembles advanced production, in which automation tools with integrated AI applications such as ChatGPT and DeepL are crucial for increasing not only the quantity, but above all the quality of content. This "intellectual revolution", comparable to the industrial revolution in terms of production processes, enables an innovative approach to text creation.

It is crucial not to misuse AI to manipulate search rankings by creating quantitative, qualitatively arbitrary content. As is actually the case when generative AI drafts (GPT-generated drafts) are published without any editing. Instead, content should be enhanced through in-depth expertise, timeliness and adaptability to different media and platforms. Automation makes it possible to keep content continuously up to date and precisely tailored to the needs of the target group.

The focus is therefore not just on the quantity of content, but rather on its quality and relevance. Comprehensive automation that goes beyond the mere creation of drafts is essential in order to fulfill these requirements and establish efficient and scalable text production processes.

In this new age of copywriting, it is possible for companies that cleverly use automation in combination with AI to stand out from the competition with high-quality, engaging content that is optimised for Google. Learn more.

August 2023: Google Changes Rich Search Results for How-tos and FAQs

It was unpleasant news for many SEOs: Google executes major changes to rich search results for how-tos and FAQs, as announced by the company itself. According to Google, these changes are intended to make search results more concise. Rich search results for FAQs will now only appear on trusted authority and health sites.

Instructions in rich search results will only be displayed on desktop devices, provided the mobile version of the site includes the appropriate markup. The changes affect the use of structured data on websites.

Search Console reports will reflect these changes, but will not affect the number of items covered. The changes will be rolled out globally from mid-August 2023, but website owners and SEOs should not panic. After all, FAQs have a positive impact on user experience and website usability. The rule of thumb is: Content should always be designed and created first and foremost for users.

June 2023: Google on New Policy about AI and E-E-A-T Content

There were several discussions at Google Search Central Live at Tokyo 2023, particularly around how Google is handling content generated by artificial intelligence (AI), as reported by Search Engine Journal. Here are the key takeaways:

- AI and Content Quality: Google doesn't distinguish between AI-generated content and human-generated content. The most important factor for Google is the quality of the content, regardless of its source.

- Labeling AI-Generated Content: Google itself does not label AI-generated content. However, the European Union is requesting social media companies to voluntarily label such content to counteract misinformation.

- Publisher Responsibility: While Google currently recommends publishers to label AI-generated images with IPTC image data metadata, they are not required to label AI-generated text content. Google is leaving it up to the publishers to decide whether it's better for the user experience to label such content.

- Reviewing AI Content: Google recommends having a human editor review AI-generated content before publishing. This applies to translated content as well.

- Natural Content Ranking: Google’s algorithms are based on human-generated content, and thus, they will prioritize and rank such "natural" content higher.

- AI Content and E-E-A-T (Expertise, Experience, Authoritativeness, and Trustworthiness): There are ongoing internal discussions within Google about how AI content fits within the E-E-A-T framework, given that AI cannot have personal experience or expertise in a topic. They will announce a policy once they arrive at a decision.

- Evolving AI Policies: Policies related to AI content are evolving due to the increasing availability of AI and concerns about its trustworthiness. Google advises publishers to maintain their focus on content quality during this transitional period.

March 2023: What is Bard? - A Short Introduction to Google’s Chatbot

- What is Bard and how does it work?

Bard is a conversational AI developed by Google and powered by a light version of LaMDA – Google’s large language model trained on texts and web data containing a total of 1.56 trillion words. Bard is able to generate text-based responses to prompts, summarize information from the internet, and supply links to relevant websites. It’s important to note that Bard is not a search engine, but rather a feature that aims to provide an interactive method for exploring topics and to help users investigate knowledge and explore a diverse range of opinions or perspectives.

- How was Bard trained?

In short, Bard was fed information from the web, which it converted to text. The responses were crowdsourced and evaluated using metrics such as meaningfulness, accuracy, and relevance to improve the chatbot's performance. However, it remains unclear what values and beliefs the crowdsourcing raters had, how they were selected, and what individual or subjective viewpoints, they might have had that might have influenced the evaluation of the given information.

- Why has Google created a chatbot?

Many see Bard as a response to OpenAI’s ChatGPT and think that the release of Bard was driven by the fear of falling behind in the technological race. However, Bard's launch suffered a setback in February 2023, when a demo showcasing the chatbot's capabilities contained a factual error, leading to a loss of confidence in Google's ability to navigate the AI era and a significant drop in market value for the company.

However, Google envisions the future of Bard as a feature in “search that distills complex information and multiple perspectives into easy-to-digest formats”, as Google wrote in their announcement of Bard. Also, Google has recently published research on making large language models cite their sources, which is likely to be crucial for information-seeking scenarios.

February 2023: "You can use AI to help you write better content"

You let a machine write your content or you got a fleet of copywriters? Well, Google does not care, as they confirmed in a statement on February 8. What is important is the helpfulness of the content - and that content is not produced in order to manipulate search results. Google writes:

"Google's ranking systems aim to reward original, high-quality content that demonstrates qualities of what we call E-E-A-T: expertise, experience, authoritativeness, and trustworthiness."

Google

The focus is solely on content quality rather than the way in which texts are produced. In this regard, Google specifically names AI-generated content and content automation:

"Automation has long been used to generate helpful content, such as sports scores, weather forecasts, and transcripts."

Google

AI, so Google, "has the ability to power new levels of expression and creativity, and to serve as a critical tool to help people create great content for the web."

February 2023: Google introducing Bard as an answer to ChatGPT

As some people and even SEO experts have declared that ChatGPT is going to be the death of the world’s largest search engine, Google released their answer to ChatGPT: Bard. The basis of Bard is LaMDA, which is Google's large-scale language model.

Sundar Pichai, CEO of Google and Alphabet, released a statement with the introduction of Bard. He describes it as "an experimental conversational AI service.”

As of now, Bard is only available to “trusted readers” but is supposed to be available to every user in just a few weeks. Google demonstrates how Bard will work in this depiction:

Like GPT-3 text generators as well as ChatGPT, Bard is relying on enormous amounts of text, available on the internet. This means that it draws information from the web to provide users with answers to their questions.

It remains to be seen how Bard will change Google, but also if it will drastically reduce informational search traffic for websites, like some predict.

January 2023: Google Reiterates Guidelines for AI Written Content

With the release of and hype about ChatGPT, Google has reiterated it’s guideline. But it was the financial services company Bankrate that provoked Google to reiterate their guidelines, as seoroundtable.com reports. Why? Bankrate had been using AI for their content production. And they have been very successful with it in search engines like Google. It’s been going so well that even Sistrix analyzed the high rankings of Bankrate’s AI content.

And Google felt compelled to make a statement about their automated content guideline:

Furthermore, Google clarified that automated content is not an issue per se:

“As said before when asked about AI, content created primarily for search engine rankings, however it is done, is against our guidance. If content is helpful & created for people first, that's not an issue.”

Google

How does Google evaluate automated content?

There was some excitement as Google Executive John Müller was asked about Google's view on AI-generated content during a Hangout at the beginning ofApril 2022. He stated:

"We consider AI-generated content to be a breach of webmaster guidelines."

Google Executive John Müller

This was often understood as a general rejection of automated content. But the Google Webmaster Guidelines clearly show: Google's attitude towards automated content remains unchanged.

Google Webmaster Guidelines: Punishment for manipulative intent

The Webmaster Guidelines still state that automated content is a problem if it has a manipulative intent and is not created to add value for users.

"If search rankings are meant to be manipulated, and content is not meant to help users, Google can take actions regarding that content."

The actions meant here are manual and require a human review, not automated ranking penalties.

Poor content and spam are the problem, not automation itself

At this point, therefore, Google has no intention of using technical methods for recognizing automated content. Instead, the search engine uses the content and formal spam criteria for this type of content, as it does for handwritten texts.

It would be technically possible to identify some content generated by large language models, but not content generated by data-to-text solutions such as AX Semantics. Only the traditional spam indicators, such as poor linguistic quality or content-free speech junk, could be detected in this case. For content generated with an emphasis on user value, such as product descriptions or automated news and weather texts, Google does not penalize the content. Also, Google tolerates the simultaneous publication of high volumes of content, so long as it is not spam.

| Which automated content is considered suspicious by Google? Source: Google Webmaster Guidelines Automated Texts - Pointless text, in which keywords are distributed. - Automated translated text without validation or underlying set of rules. - Very simple automated text based on Markov chains or using synonymization or concealment techniques. - Content compiled from different web pages without sufficient added value. |

The reason for the current discussion: AI hype due to ChatGPT and GPT-3 tools that do not have a rule-based approach

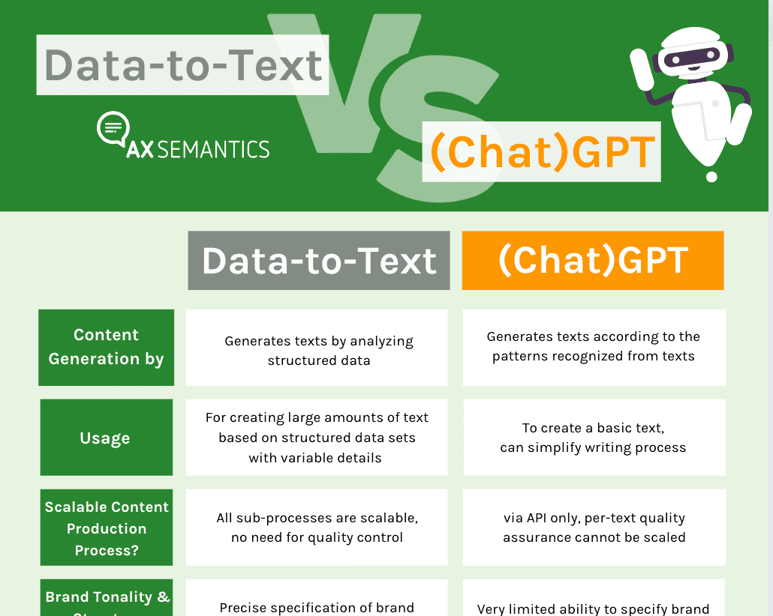

The current discussion is due to the widespread use of ChatGPT and GPT3 tools. Once again, it is important to point out the difference between GPT and the data-to-text approach of AX Semantics.

SEO, Data-to-Text, and GPT

SEO Expert Miranda Miller from Search Engine Journal, points out quite rightly that there are a lot of established AI content projects that have great content (and rank well on Google with it):

"The Associated Press started using AI to generate news in 2014. Using AI in content creation is nothing new, and the most important factor here is using it smartly."

SEO Expert Miranda Miller from Search Engine Journal

But which software is more reliable as regards SEO: data-to-text or GPT?

- The content created with data-to-text is based on data and data interpretations, rather than meaningless generalizations. The content meaning - and therefore the user value for Google - is determined by users (via logics, stories and triggers). So there are semantics in the texts, not just correct syntax.

- In data-to-text software, users review every decision made by AI components in the system. So they are involved in all decisive phases of the content generation.

- Some software can determine whether a text is based on large language models, but there is no technical way to identify data-to-text content.

FAQ

What is content automation?

Automated content generation with AX Semantics works with the help from Natural Language Generation (NLG) - a technology that generates high-quality and unique content on the basis of structured data that can't be distinguished from manually written content. Typical uses for text automation are product descriptions, category content, financial or sport reports or content for search engines websites - in a nutshell, all kinds of content that require large quantities and have a similar fundamental structure.

Structured data is data that adheres to a pre-defined data model and is therefore easy to analyze. Structured data conforms to a well designed pattern, for example a table showing connections between the different rows and columns. Common examples of structured data are Excel files or SQL databases. Structured data is an important basis for automated content generation. For product descriptions in e-commerce, for example, these are often available in a shop system, product information system (PIM) or in the shop.

Numerous AX Semantics customers results show that automated content is worthwhile when it comes to SEO. The e-commerce company MYTHERESA increased its visibility by 80% for relevant keywords within 6 months after beginning to use automated content. KitchenAdvisor is another example. They also registered a 0.7 to 1 increase in Sistrix visibility within 3 months.

AX Semantics software is configured to produce thousands of unique pieces of content. Most users subsequently publish the content on the web, with the aim of gaining visibility on Google, among other things. Therefore, there are various functions in the tool (sentence variants, synonyms, triggers, sentence sequences, etc.) to ensure variance and uniqueness. Fundamentally, this is how it works: After an initial configuration, you can use the software to generate unique and high-quality content based on structured data. You create one-time logics, content blocks and as needed variances for all possible "events". This way, evaluations, assessments and conclusions can be made in the text. Based on this and the information it finds in the data, the software forms content based on natural language. Then, each time content is regenerated, the software assembles the components into a new unique content, following the predefined rules.

Robert Weißgraeber

Robert Weissgraeber is Co-CEO and CTO at AX Semantics, where he heads up product development and engineering and is a managing director. Robert is an in-demand speaker and an author on topics including agile software development and natural language generation (NLG) technologies, a member of the Forbes Technology Council. He was previously Chief Product Officer at aexea and studied Chemistry at the Johannes Gutenberg University and did a research stint at Cornell University.